Via Inverse, a look at how artificial intelligence (AI) can help conservation:

It’s on us to be better caretakers for this beautiful, warming planet that we (and a few million other species) call home. Thankfully, a computer vision algorithm has learned to do, in a fraction of the time, a job that once required the help of tens of thousands of citizen wildlife scientists.

The A.I. successfully labeled roughly three million images taken by Snapshot Serengeti, a project that aims to preserve biodiversity and seek out new phenomena by filling the Serengeti with unobtrusive cameras that monitor endangered species more carefully. This is all thanks to a team of computer scientists, led by Mohammad Sadegh Norouzzadeh at the University of Wyoming, who together developed an algorithm to analyze the images. Previously, 30,000 volunteers had to label them manually. Now this animal-identifying A.I., described in the journal Proceedings of the National Academy of Sciences, allows these citizen scientists to devote their time to conservation endeavors instead of spending hours sorting through photos.

“We can save them time and provide them with information quickly and accurately,” Norouzzadeh told Inverse. “The current process they’re using is very slow so it can give them outdated information. Machine learning can supply up-to-date information so they can plan for conservation efforts. That’s why we think this is such a critical advancement for ecology.”

Getting data to ecologists quickly will allow them to take immediate action on ongoing issues. Norouzzadeh is also confident that his algorithm won’t eliminate the need for citizen scientists: roughly 0.7 percent of the images still need a human’s touch because the A.I. can’t tell exactly what’s happening in them.
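The article doesn't say how the system decides which images to hand back to people. One common way to split the work is a confidence threshold: labels the classifier is sure of are accepted automatically, and the rest go to volunteers. The sketch below is hypothetical; the `Prediction` type, the `triage` function and the 0.95 cut-off are illustrative assumptions, not the Wyoming team's code.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    image_id: str
    label: str         # e.g. "zebra", "wildebeest", "empty"
    confidence: float  # classifier's probability for `label`, in [0, 1]


def triage(predictions, threshold=0.95):
    """Split predictions into auto-accepted labels and a human review queue.

    `threshold` is a hypothetical cut-off; in practice it would be tuned on
    held-out images that volunteers have already labelled.
    """
    auto, review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto.append(p)
        else:
            review.append(p)
    return auto, review


preds = [
    Prediction("img_001", "zebra", 0.99),
    Prediction("img_002", "empty", 0.97),
    Prediction("img_003", "lion", 0.62),  # uncertain: send to a volunteer
]
auto, review = triage(preds)
print(f"automated: {len(auto)}, for humans: {[p.image_id for p in review]}")
```

Raising the threshold sends more images to people but makes the automated labels more trustworthy; the small share left for humans in the article reflects where that balance was struck.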


Via EurekAlert, a look at the use of drones to survey African wildlife:

A new technique developed by Swiss researchers enables fast and accurate counting of gnu, oryx and other large mammals living in wildlife reserves. Drones are used to remotely photograph wilderness areas, and the images are then analysed using object recognition software and verified by humans. The work is reported in a paper published in the journal Remote Sensing of Environment.

The challenge is daunting: some African national parks extend over areas that are half the size of Switzerland, says Devis Tuia, an SNSF Professor now at the University of Wageningen (Netherlands) and a member of the team behind the Savmap project, launched in 2014 at EPFL. “Automating part of the animal counting makes it easier to collect more accurate and up-to-date information.”

No animal left uncounted

The drones make it possible to cover vast areas economically. But it’s more complicated than simply sending them up to photograph the terrain: more than 150 images are taken per square kilometre. At first glance, it’s hard to tell the animals from other features of the landscape such as shrubs and rocks.

To decipher this mass of raw visual data, the researchers used a kind of artificial intelligence (AI) known as “deep learning”. Conceived by PhD candidate Benjamin Kellenberger, the algorithm enables researchers to immediately eliminate most of the images that contain no wildlife. In the other images, the algorithm highlights the patterns most likely to be animals.

This initial phase of elimination and sorting is the longest and most painstaking, says Tuia. “For the AI system to do this effectively, it can’t miss a single animal. So it has to have a fairly large tolerance, even if that means generating more false positives, such as bushes wrongly identified as animals, which then have to be manually eliminated.”
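The “large tolerance” Tuia describes amounts to setting the detection threshold low enough that no real animal falls below it. Below is a minimal sketch of that trade-off, assuming the detector gives each candidate region a score and that a labelled validation set is available; the function names and scores are hypothetical, not the Savmap code.

```python
def max_threshold_for_full_recall(scores, is_animal):
    """Return the highest detection threshold that still flags every true animal.

    scores    -- detector scores for candidate regions on a labelled validation set
    is_animal -- matching ground-truth flags (True = real animal, False = bush/rock)
    """
    animal_scores = [s for s, a in zip(scores, is_animal) if a]
    if not animal_scores:
        raise ValueError("validation set contains no animals")
    # Any threshold at or below the weakest animal's score keeps all animals.
    return min(animal_scores)


def count_false_positives(scores, is_animal, threshold):
    """Count non-animal regions that would still be flagged for a human to check."""
    return sum(1 for s, a in zip(scores, is_animal) if s >= threshold and not a)


scores = [0.91, 0.35, 0.80, 0.40, 0.10, 0.55]
is_animal = [True, True, False, False, False, True]
t = max_threshold_for_full_recall(scores, is_animal)
print(t, count_false_positives(scores, is_animal, t))  # 0.35 2
```

Because the faintest real animal scores only 0.35, the threshold has to drop that far, and two bushes or rocks slip through for manual removal: exactly the false positives the quote accepts as the price of missing nothing.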

The team started by preparing the data needed to train the AI system to recognise features of interest. During an international crowdsourcing campaign launched by EPFL, some 200 volunteers tracked animals in thousands of aerial photographs of the savannah taken by researchers at the Kuzikus wildlife reserve in Namibia.

These images were then analysed by the AI system, which had been trained with a scoring scheme that penalises different types of errors differently: the AI system is given one penalty point for mistaking a bush for an animal, whereas missing an animal completely costs 80 points. As a result, the software learns to distinguish wildlife from inanimate features but, above all, not to miss any animals. Once the AI system has whittled down the collection of images, a human takes over the final sorting, made easier by coloured frames automatically placed around questionable features.
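The 1-versus-80 penalty can be written down directly. The article gives the two costs but not the exact training loss, so the class-weighted cross-entropy below is an assumption: it is one standard way to make a missed animal hurt far more than a flagged bush, not necessarily the formulation the researchers used.

```python
import math

FALSE_POSITIVE_COST = 1   # a bush or rock flagged as an animal
MISSED_ANIMAL_COST = 80   # a real animal the system failed to flag


def penalty(false_positives, missed_animals):
    """Total penalty under the 1-vs-80 scheme described in the article."""
    return FALSE_POSITIVE_COST * false_positives + MISSED_ANIMAL_COST * missed_animals


def weighted_bce(p, y, pos_weight=MISSED_ANIMAL_COST, neg_weight=FALSE_POSITIVE_COST):
    """Class-weighted binary cross-entropy for one candidate region (an assumption).

    p -- predicted probability that the region is an animal
    y -- 1 if it really is an animal, 0 otherwise
    """
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)  # keep log() finite
    return -(pos_weight * y * math.log(p) + neg_weight * (1 - y) * math.log(1 - p))


# 79 bushes flagged still costs less than a single missed animal.
print(penalty(false_positives=79, missed_animals=0))  # 79
print(penalty(false_positives=0, missed_animals=1))   # 80

# The same size of mistake (30% confidence on the wrong side) costs 80x more
# when the region really is an animal.
print(weighted_bce(0.3, 1), weighted_bce(0.7, 0))
```

During training, gradients from missed animals therefore dominate, which pushes the detector toward the high-recall, bush-tolerant behaviour described above.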

100 square kilometres per week

This semi-automated technique was developed in collaboration with biologists at the Kuzikus wildlife reserve in Namibia. Since 2014, the researchers have periodically flown over the reserve using drones designed and optimised by SenseFly, a Swiss company, and equipped with compact, standard cameras. “In the beginning we were rather sceptical,” says Friedrich Reinhard, director of the reserve. “The drones produce so many images that I thought it would be difficult to use.”

But, thanks to the sorting performed by the AI system, just one person can carry out a full count of the Namibian reserve (an area of around 100 square kilometres) in about a week. In contrast, conventional methods involve entire teams aboard a helicopter. These methods are both less accurate and so expensive that they are rarely used: once a year at most in Kuzikus.
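A quick calculation with the figures quoted above shows why the automatic pre-sorting matters. The survey size follows from the article's numbers; the fraction of images the AI leaves for a human is a hypothetical value chosen only for illustration, since the article does not report it.

```python
IMAGES_PER_KM2 = 150      # "more than 150 images are taken per square kilometre"
RESERVE_AREA_KM2 = 100    # Kuzikus: "an area of around 100 square kilometres"
KEPT_FRACTION = 0.10      # hypothetical share of images left for human review

images_per_survey = IMAGES_PER_KM2 * RESERVE_AREA_KM2  # a lower bound
left_for_human = round(images_per_survey * KEPT_FRACTION)
print(images_per_survey, left_for_human)  # 15000 1500
```

Even as a lower bound, one full survey yields around 15,000 images, far more than a single person could comb through by hand in a week.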

The Swiss researchers are continuing their work with the Namibian reserve. EPFL students travel there regularly. The Kenyan authorities have also expressed interest, as has the Veluwe National Park in the Netherlands. Recently appointed professor at the University of Wageningen (Netherlands), Devis Tuia still works closely with the University of Zurich (where he was SNSF Professor) and EPFL, which coordinates the Savmap project.


Via TechCrunch, an interesting report on how one organization is using augmented reality, gamification, and mapping to help improve conservation outcomes:

Wildlife conservation groups have made a lot of strides in raising awareness of animals whose populations or natural habitats are endangered, and what we can do to help. Now a startup out of Kenya is tapping into advances made in augmented reality, mapping and app-based games to further the cause.

Internet of Elephants, a startup based in Nairobi, is building an app-based game of the same name that teaches users about different species of wildlife in Kenya, as well as in other countries and regions, by letting them select animals and “place” them into their real-world environments to follow them around. Users can learn more about the animals through a reference guide in the app, as well as by walking around the physical world and playing games based on each creature’s migratory paths.

Internet of Elephants (a pun on “Internet of Everything”) brings together, and tips its hat to, a number of innovations in the worlds of mobile and gaming. Niantic’s Pokemon Go catapulted augmented reality gaming into the mainstream with its premise of finding and “catching” fictional Pokemon creatures, using your handset’s screen to project them into your surroundings in real time. Apple and Google have also laid the groundwork for many more AR apps with the respective launches of ARKit and ARCore.

Beyond Pokemon Go, a wider group of games also relies on the location and mapping technology built into our devices as part and parcel of the experience, from Zombies, Run! to the veteran game of Geocaching (which has been around since 2000, when it was first played on GPS devices).

Equally important is that wildlife organizations, like many others, have capitalised on the app revolution both to create content about their work and to disseminate it. Many of us now take it for granted that we can find whatever information we want simply by tapping our little screens; likewise, those who hold the information now know that this is an essential way to communicate with the wider world, and that apps which go beyond basic reference are the most effective at doing it.

Internet of Elephants brings all of these strands together in its effort to educate consumers about wildlife, and to do so in a more engaging way.

The product is the brainchild of Gautam Shah, an American who originally came to Kenya to work in IT consultancy for a large firm. A wildlife enthusiast who felt the pull to start something of his own, he saw an opportunity to build a new business from the ground up that brought both interests together while leveraging the city’s rising tech ecosystem.

As TechCrunch saw on our own trip to Nairobi earlier this year, when we organised our first regional Battlefield event independent of a Disrupt conference, the city is one of the thriving hotspots for tech entrepreneurship in sub-Saharan Africa.

Innovations like M-Pesa, the highly successful mobile payment service that acts as a bank account and payment method for people who have neither a traditional bank account nor a payment card, got their start in Kenya. More than that, M-Pesa has become one of the key examples of how sub-Saharan Africa, one of the more underdeveloped regions in the world, is ripe for some of the newest and most interesting innovations hitting the market today to fill the vacuum and help improve people’s lives.

Anchored by Shah, the full team is a mix of Nairobi natives and expats who moved to the city through past work, since Nairobi is also a popular base for development and humanitarian organizations. They include some very interesting folks: the product lead, Jake Manion, spent six years as creative director for Aardman Animations, the Academy Award-winning studio behind Wallace & Gromit and Shaun the Sheep. And the startup has not just a team of technical and product people working on different elements of the game, but another group focused on the content, specifically on how it interfaces with the wider wildlife conservation community.

The conservation community is where Internet of Elephants’ business model comes into play. The company is building out its animal kingdom (so to speak) through partnerships with different groups, which can essentially create their own areas within the app and help build up the material related to a particular animal, whether its migratory patterns or other background information.

Over time, the idea will be to cover various geographies and different groups, as well as other kinds of organizations that work with animals, such as conservation-minded zoos and preserves.

As with many other mobile games, there are a number of features built in that Internet of Elephants could potentially monetize, both for its own business as well as on behalf of these organizations, such as new levels and migrations, or new animals to unlock, and of course options to donate within the app.

I had a chance to talk to Shah at Nairobi National Park, a wildlife park right next to the city, with the skyline surreally visible on the horizon of the savannah. That juxtaposition made it a fitting location to talk about how Internet of Elephants is using tech to shed more light on a very non-tech part of our world.


Via Conservify, interesting commentary about their open conservation movement:

Throughout the general discussion around open source, one subject that is rarely mentioned is wildlife conservation. But, as with many things that are new or not yet discussed, that doesn’t mean it has no place there. Open conservation is where my work is focused, with the hope that we can lower the costs and barriers associated with effective conservation through the use of open source technologies.

I was recently on episode 24 of the OKCast podcast talking about Open Conservation. The OKCast is a weekly open source blog and podcast with “the goal to explore, connect, use and inspire open knowledge projects around the world to develop the public commons, improve organization and government transparency and communication, and advocate for social justice and social activism.” You should certainly listen to some of their previous podcasts and follow them on Twitter.

Openness is at the very core of Conservify, since our current closed information management approach and sole reliance on military enforcement are the root causes of why we are too slow and too expensive to stop wildlife crime. These closed systems mask the true size of the wildlife problem. Communities and nonprofits are begging for these types of solutions, and almost nothing currently exists to meet that need. When conservation information changes from something that only a few can access to an open public good, the incentives around wildlife crime and overexploitation start to change for the better.

Open source methods have been used in every one of my conservation technology research projects and pilot implementations to date, including a dedication to share developed code on open repositories with open licenses. Arduino-based hardware sits at the core of the ultraVMS prototypes. MPA Guardian was built using Ushahidi and FrontlineSMS. SoarOcean was built through the DIY Drones community and all the associated hardware, tools, flight plans, documentation, and lessons learned will be freely shared on the Conservify and SoarOcean websites. The connected conservation sensor platforms all make use of Arduino or Raspberry Pi devices to manage the sensors with code available on the Conservify repositories. All data from these projects are published with Open Data licenses to allow access and reuse of the sensor readings.

Additionally, the work with the National Geographic-funded Okavango Wilderness Project seeks to fundamentally open up the way scientific field expeditions are conducted. In 2014, a team of National Geographic Explorers (including myself) traveled along the Okavango Delta in Botswana, sharing every piece of data we collected, including environmental readings, water quality, wildlife sightings, biometrics, and more, with any researcher, citizen scientist, artist, student, or interested person who wanted it (through access to the IntoTheOkavango.org API). This is revolutionary because, in the past, scientists would go on expeditions and collect data, only to guard the data closely until they could publish it and gain accolades. We were seeking to do exactly the opposite. I am the project technologist and open hardware designer, focused on water and air quality testing and on building prototype environmental monitoring stations based on the Raspberry Pi. For the 2015 expedition, I am building a mesh network of open source environmental sensors to help us measure, in real time, the heartbeat and health of this critical habitat. We are equipping the expedition canoes (mokoros) with connected conservation devices to map environmental data as the three-month expedition travels from the source of the delta in Angola, through Namibia, and into Botswana. The open source hardware and software used for this are part of the baseline that Conservify is built upon.
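As a rough illustration of what “sharing every piece of data” can look like at the level of a single sensor reading, here is a minimal sketch of one geotagged reading serialised as a JSON line carrying an open-data license tag. The field names, the station ID, the placeholder coordinates and the choice of the ODbL license are all assumptions for illustration; this is not Conservify’s or the IntoTheOkavango.org API’s actual format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class SensorReading:
    station_id: str   # hypothetical ID of a canoe-mounted sensor node
    timestamp: str    # ISO 8601, UTC
    lat: float        # placeholder coordinates, not real expedition data
    lon: float
    sensor: str       # e.g. "water_temp_c", "ph", "dissolved_oxygen_mg_l"
    value: float
    license: str = "ODbL-1.0"  # assumption: an SPDX open-data license identifier


def to_open_record(reading: SensorReading) -> str:
    """Serialise one reading as a JSON line that anyone can download and reuse."""
    return json.dumps(asdict(reading), sort_keys=True)


r = SensorReading(
    station_id="mokoro-03",
    timestamp=datetime(2015, 6, 1, 8, 30, tzinfo=timezone.utc).isoformat(),
    lat=-19.0,
    lon=22.5,
    sensor="ph",
    value=7.2,
)
print(to_open_record(r))
```

Putting the location, the time and the license in every record means each reading remains reusable on its own, even after it has been copied far away from the project that collected it.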

Conservify’s mission is to seek openness as a means of battling environmental crimes and providing mechanisms for increased cooperation in conservation. Through better management, analysis, and geospatial visualization of this data, we can showcase successes, share challenges, and create a comprehensive global understanding of the health of our ecosystems. Through creating information where there was none before, we can shift the incentive structures around wildlife trafficking and coordinate action around an issue. By listening to the communities most impacted by overfishing and poaching, I realized that we needed to create an open and free way for a concerned citizen, NGO, community, government, academic, or scientist to be able to establish a conservation project and help to collect the data to make the protection of these resources successful. We now have the tools to keep wildlife reserves free from poaching, illegal logging, and pollution by modernizing and opening up the technology to do so.


Via Yale’s e360, a report on how experts are now warning that the rapid growth of digital data, while a boon to researchers and conservationists, has a dark side: poachers can use computers and smartphones to pinpoint the locations of rare and endangered species and then go nab them:

Melita Weideman, then a Knersvlakte ranger, had just finished work one July afternoon in 2015 when she was called to check out a mysterious pickup truck parked just outside the reserve. Weideman saw a man and woman walking through the approaching winter sunset toward the vehicle and then noticed empty cardboard boxes on the double-cab’s back seat. “That’s very weird,” she recalls thinking. “It looks like they’re collecting things.”

The couple had no reserve entry permits. When Weideman asked to see inside their backpacks, they initially refused. “It was quite a stressful situation because we [rangers] are not armed, and I didn’t know if they were armed.” But Weideman persisted and the bags were opened, revealing 49 of the small, cryptic succulent plants that grow between the Knersvlakte’s stones. Jose (aka Josep) Maria Aurell Cardona and his wife Maria Jose Gonzalez Puicarbo, both Spanish citizens, were arrested. A search of their guesthouse room in a nearby town revealed 14 large boxes containing over 2,000 succulents, including hundreds of specimens of threatened and protected species, courier receipts showing that many more had already been sent to Spain, and notes documenting their extensive collecting trips across South Africa and neighboring Namibia.

Authorities soon discovered that the couple had been selling poached plants through their anonymously-operated website, www.africansucculents.eu, and calculated the value of the plants in their possession at about $80,000. After 16 nights in nearby jails, Cardona and Puicarbo accepted a plea bargain, paid a $160,000 fine — the largest ever for plant thieves in South Africa — and were banned from the country forever.

Cardona and Puicarbo’s ill-fated trip had been meticulously planned using information sourced online. They were carrying extracts from data lists and books about threatened plants, electronic scientific journals describing new species, messages from botanical listservs and social networks, pages from a digital archive of museum specimens named JSTOR Global Plants, photos and information from citizen science website iSpot, detailed maps from off-road vehicle websites, and hundreds of tabulated GPS waypoints for rare plant locations apparently downloaded from the Internet.

Twenty or more years ago it would have taken dozens of long field trips and thousands of miles of travel to acquire this volume of detailed information about southern Africa’s rare succulents; an entire botanical career, perhaps. In 2015, a pair of poachers could acquire it in a short time from a desk on another continent.

The Cardona-Puicarbo case provides rare insight into an emerging problem: The burgeoning pools of digital data from electronic tags, online scientific publications, “citizen science” databases and the like – which have been an extraordinary boon to researchers and conservationists – can easily be misused by poachers and illegal collectors. Although a handful of scientists have recently raised concerns about it, the problem is so far poorly understood.

Today, researchers are surveilling everything from blue whales to honeybees with remote cameras and electronic tags. While this has had real benefits for conservation, some attempts to use real-time location data in order to harm animals have become known: Hunters have shared tips on how to use VHF radio signals from Yellowstone National Park wolves’ research collars to locate the animals. (Although many collared wolves that roamed outside the park have been killed, no hunter has actually been caught tracking tag signals.) In 2013, hackers in India apparently successfully accessed tiger satellite-tag data, but wildlife authorities quickly increased security and no tigers seem to have been harmed as a result. Western Australian government agents used a boat-mounted acoustic tag detector to hunt tagged white sharks in 2015. (At least one shark was killed, but it was not confirmed whether it was tagged). Canada’s Banff National Park last year banned VHF radio receivers after photographers were suspected of harassing tagged animals.

While there is no proof yet of a widespread problem, experts say it is often in researchers’ and equipment manufacturers’ interests to underreport abuse. Biologist Steven Cooke of Carleton University in Canada lead-authored a paper this year cautioning that the “failure to adopt more proactive thinking about the unintended consequences of electronic tagging could lead to malicious exploitation and disturbance of the very organisms researchers hope to understand and conserve.” The paper warned that non-scientists could easily buy tags and receivers to poach animals and disrupt scientific studies, noting that “although telemetry terrorism may seem far-fetched, some fringe groups and industry players may have incentives for doing so.”

It is difficult to tap into most tag data streams, say experts. Accessing an unencrypted VHF signal from a relatively cheap radio tag requires knowledge of its exact frequency, although this can sometimes be found with a scanner. Data from more-expensive GPS tags are usually encrypted and password-protected. “I’m not saying it’s impossible to hack into a tag signal,” says one African technical expert who declined to be named because he sells GPS tags for rhinos and elephants, “but you would need extremely high-level knowledge and equipment. I don’t know of any cases in Africa.”

A more serious risk, experts say, is posed by the voluminous geospatial information in Internet-accessible databases like those being created by museum staffers who are archiving millions of digital photos of plants and animal specimens, each with a location attached. In addition, huge “citizen science” projects are leveraging millions of volunteer hours to build species databases bulging with geospatial datapoints and geo-referenced photographs, audio, and videos.

These data stores of species’ locations are an irreplaceable and growing asset to science and conservation, enabling researchers to pinpoint threats to endangered species, observe ecosystem responses to climate change, and even uncover new species. Many are designed to be open and easily accessible, which has multiplied their value and dramatically lowered research costs.

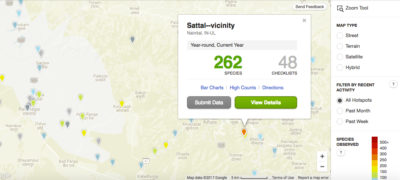

Geotagged bird sightings in northern India, mapped by the citizen science website eBird.

eBird, based at Cornell University, is one of the world’s most successful “citizen science” wildlife mapping projects. It has a quarter of a million registered users globally who have uploaded hundreds of millions of observations from almost every country. By “gamifying” birding to leverage birders’ competitive instincts, eBird has built a highly productive community of volunteer data collectors who have enabled scientists to identify threats to birds and understand bird movement in unprecedented ways.

Like many other citizen science projects, eBird was deliberately developed to encourage data sharing. Contributors can share and download lists of bird locations and find millions of sightings on digital maps. It’s so open, says project leader Marshall Iliff, that “anyone can basically download the entire eBird dataset.”

When eBird launched, Iliff says, the idea that its data could be used to harm birds was far from its developers’ minds, because few North American species are seriously threatened by illegal hunting or capture. As the project has expanded into countries where more birds are threatened by such activities, however, staff have realized that some species’ data should be hidden. But this is no simple task: Since eBird’s edifice was built from the ground up to be maximally accessible, Iliff says, hiding data is “a challenging thing to work out both on the technical and the policy sides.” After much deliberation, the platform’s code is now being extensively rewritten so selected species’ locations can be kept from public view.
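The article doesn’t describe how eBird’s rewritten code works. As a generic sketch of the idea of keeping “selected species’ locations” out of public view, the hypothetical filter below keeps the fact of a sighting (still useful for counts and trends) but strips the coordinates a collector would need. The species names, coordinates and the `public_view` function are placeholders, not eBird’s implementation; deciding which species belong on the sensitive list is the hard policy question discussed below.

```python
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical list of species whose locations must not be published.
SENSITIVE_SPECIES = {"species_x"}


@dataclass(frozen=True)
class Observation:
    species: str
    count: int
    lat: Optional[float]
    lon: Optional[float]


def public_view(obs: Observation) -> Observation:
    """Return the record as it would appear in a public download or map."""
    if obs.species in SENSITIVE_SPECIES:
        # Keep the sighting itself but withhold where it happened.
        return replace(obs, lat=None, lon=None)
    return obs


records = [
    Observation("species_x", 2, -1.2921, 36.8219),
    Observation("species_y", 14, -1.3000, 36.8000),
]
for r in map(public_view, records):
    print(r)
```

Applying the filter at the point where data leave the system, rather than editing the stored records, lets researchers with approved access still work with the full-resolution data.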

While few North American birds may be endangered by releasing their geospatial data, this is not true for many small, lesser-known species in the developing world. A shadowy international community of collectors pays well for rare succulent plants, orchids, reptiles, spiders, and insects, often found where wildlife law enforcement is patchy. The more obscure and rare a species is, the more valuable. Rarity makes species vulnerable to being completely wiped out by poachers; some targeted South African plants and insects are found only in a few acres.

Paul Gildenhuys, who heads the biodiversity crime unit in South Africa’s Western Cape Province, tells me that in the past, many poachers were academics looking for a few specimens for themselves. Now more profit-focused international traders have entered the scene, he says, “and they really don’t care. If they find a lizard colony, they won’t just take one or two animals, they’ll bring crowbars to smash rocks so they can take the whole lot.”

Collectors scour scientific journals for descriptions of new species, which traditionally include their locations. Many new species have been poached within months of being described, which recently inspired David Lindenmayer and Ben Scheele of the Australian National University to write a strongly worded article in Science titled “Do not publish.” Pointing out that academic journals are rapidly embracing online open-access publication, they called on their colleagues to “urgently unlearn parts of their centuries-old publishing culture and rethink the benefits of publishing location data and habitat descriptions for rare and endangered species so as to avoid unwittingly contributing to further species declines.” In a reply titled “Publish openly but responsibly,” another group of biologists implicitly accused Lindenmayer and Scheele of overreacting, saying that existing institutional data policies were sufficient to protect species. “Conservation biologists can … ensure data are available through secure sources for approved purposes,” they wrote.

But how are “approved purposes” defined, and by whom? And which species truly require data redaction? Biologists disagree sharply. Some believe that all data from Red-Listed species should automatically be withheld; others point out that many Red-Listed species are not threatened by poachers, but by habitat destruction or climate change. Some institutions and governments currently have biodiversity data policies, but many have no policy at all. There is no internationally-agreed protocol for deciding which data to hold back and when to release it.

While biologists can control location data in their own journals and archives, they can’t control the sprawling, dynamic world of social media, where enthusiasts share wildlife notes and photos in an ever-growing galaxy of online groups. Typical of these is Snakes of South Africa, a thriving, conservation-focused Facebook group where anyone can share photos of snakes for volunteer experts to identify. The group helps find handlers to relocate snakes without harm and even assists with snakebite medical advice. Group administrator Tyrone Ping tells me that poachers often pretend to be helpful experts to learn locations of valuable snakes. “We throw them out, but they join again with a fake profile.” (I recently observed a member of a European Facebook group openly explain where to find a desired snake species in Egypt and how to smuggle it through Cairo International Airport.)

And conservation officials say tourists’ social media posts can also pose a risk. More than 1,000 rhino have been poached annually in South Africa since 2013, and a wildlife crime investigator based near Kruger National Park tells me that poachers scan social media for tourists’ photos of rhinos, which are often tagged with locations or contain identifiable landscape features. Poachers’ raids are planned with Google Maps and co-ordinated via WhatsApp. Many African parks are asking visitors not to post rhino photos, but there’s no practical way to stop them.

A growing number of mobile apps are designed specifically to allow tourists to share locations and photos of animal sightings with a network of fellow park visitors. Nadav Ossendryver built Latest Sightings — the most popular of these — as a 15-year-old in 2011 after a frustrating trip to Kruger National Park during which he couldn’t find “good” animals. “I kept thinking someone must be looking at a leopard or a lion, and it must be close by,” he says. Today the app has over 42,000 active members. Ossendryver tells me he’s promoting conservation to a younger audience, and his app does not log rhino sightings. But Kruger’s management is nonetheless strongly against Latest Sightings: App users, they say, are speeding toward reported sightings, sometimes road-killing animals and causing traffic congestion that interferes with natural animal behavior.

In the world of science, however, some researchers remain wary of moves to withhold data. “Science depends on the transparency of information,” says Vincent Smith, a research leader in informatics at London’s Natural History Museum. “Geospatial information is some of the most valuable data we have. To remove it would remove the opportunity to do enormous amounts of research. It would seriously harm all science.”

Tony Rebelo, a South African biologist and supporter of iSpot, a crowd-sourced online archive, says that to some extent policies on withholding information are irrelevant because “once you give your data to anyone, no matter how trusted, it’s out there.” It’s also hard to track and predict the fickle collectors’ market. A species can suddenly become desired years after its location has been deemed safe to publish. Many researchers I interviewed had been contacted by fake biologists seeking data on rare species.

One case bolsters the claim that hiding geospatial data can protect species. In 2009, Tim Davenport, the Wildlife Conservation Society’s program director in Tanzania, discovered an attractive new snake species in a small forest in that country. He named it Matilda’s Horned Viper, Atheris matildae, after his daughter. Recognizing that its tiny natural range made it vulnerable to poachers, he formally described it in 2011 without publishing its location, which was unusual at that time. Davenport says that although he has seen Atheris matildae advertised online, every case he has followed up involves a seller passing off a similar, common species. Hiding its locality seems to have worked.


Via Vice’s Motherboard, an interesting look at how smart lakes and smart forests are helping researchers understand our impact on nature:

A bright yellow platform the size of a jet ski bobbed on Lake George as IBM research engineer Mike Kelly climbed aboard. Unlike the other tourists at the popular vacation spot in upstate New York, Kelly wasn’t there for a break; he was checking on sensors that transform the waterway into a “smart lake.”

The sensor rig he’d boarded was monitoring pollution, including road salt. Thick cakes of salt dumped on upstate New York roads during snowstorms inevitably wash into Lake George each spring with the snowmelt, encouraging the proliferation of invasive species and making the otherwise strikingly clear waters dark and murky.

Kelly popped open a panel to show me the pulley that sends sensors into the depths of the lake. A mechanism inside was set to lower the sensors at the top of each hour and reel them back in. Soon after he opened the panel, the pulley system began whirring like the spool of a mechanized fishing rod. The wire holding the sensors slid through a one-foot-wide hole in the platform’s metal grating, and I looked into the suede-blue water, wondering what types of life were being scanned 200 feet below my soggy shoes.

Lake George isn’t the only natural spot to get a technological upgrade. At Harvard Forest, a sensor-laden forest monitored by Harvard University, researchers are putting trees under the microscope to see how forests develop. At Oregon State University, researchers analyze songbirds’ chirping, treating certain noises as a “canary in a coal mine” for larger ecological issues.

That type of technology is helpful for developed cities to better manage their natural resources, but it could be life-saving for developing regions where data on water quality is less reliable. Harry Kolar, a researcher at IBM, said the long-term vision is to sell some of these sensor units to NGOs and researchers in developing nations so they can have the data to begin addressing those problems. It will only become a bigger issue as climate change reduces the world’s available clean water.

“Managing resources such as water quality in general is becoming a bigger problem across the world and has been for a while,” he said.

At Lake George, aquatic sensors are automatically dipped into the lake every hour to take measurements, including oxygen levels, pH, and salinity. They stay on the lake throughout most of the year (except when the lake freezes over in the winter) as part of the Jefferson Project at Lake George, a research collaboration between Rensselaer Polytechnic Institute, IBM, and The FUND for Lake George.

Larry Eichler of RPI, a university in upstate New York, has been studying the lake for decades. He told me these sensors, the first of which was put on the lake in March, collect as much data in a week as he collected in 30 years of taking measurements by hand. Across three rigs, 265 sensors have been installed on the lake, including one platform whose sensors researchers can communicate with in real time rather than having to pre-program them. If the sensors pick up something interesting, such as a spike in a pollutant, they are programmed to run additional scans automatically.

The researchers said the sensors will eventually be able to send an email or text message to researchers, water plant operators, and city officials in the event of a major issue, such as a toxic algae bloom or a hazardous waste spill. All of that better informs experiments and, in theory, could guide local legislation.

On another rainy day in upstate New York, RPI professor Rick Relyea led me to a field full of neon-blue plastic kiddie pools and black cattle troughs. The 400 or so containers held water from the lake, plus nearly every species of plant and animal that lives in it.

This is the final stage of the smart lake experiment, which is part of the Jefferson Project: sensors collect data, modeling software predicts problems, and the kiddie pools serve as tiny lakes for experimental confirmation. “Here we can tell you what the future will be,” Relyea said.

The sensors are picking up more salt and more invasive snails? Throw that type of salt and those species into a pool with lake water and see what happens. (The calcium in one type of road salt, calcium chloride, helps invasive snails build shells more easily, helping them take over.)

And if a city wants to try out a new type of road salt, it can test the salt here to make sure it won’t turn the water murky or kill the fish, avoiding lost tourism dollars, crashing housing prices and boatloads of lawsuits.

As for Lake George’s future, data could protect its beauty and thriving ecosystem.

“It’s not too far gone,” Relyea said. “Changes can be made to turn it around if those changes are informed by science.”
