With California already suffering through a devastating drought, another water catastrophe has emerged: The state is quickly depleting its groundwater reserves.

Reservoirs, creeks, and rivers usually supply a large portion of California’s water for drinking and irrigation. With that water in short supply, farmers pump groundwater by drilling wells. Because of the drought, groundwater is now furnishing close to 70 percent of the state’s water, up from 40 percent in a typical year, according to Jay Famiglietti, a hydrologist at the University of California, Irvine.

“We’re poised for a major run on groundwater,” he said, noting that water restrictions have sparked a well-drilling frenzy in some regions, leading people to drain groundwater faster than it can be replaced. A state report released in May shows that groundwater levels have hit record lows since 2008.

Worse, California lacks a statewide system to regulate groundwater pumping. But that could soon change. State lawmakers have approved a bill requiring local agencies to develop groundwater-management plans, and the state will release a draft blueprint later this year—which could lead to the monitoring and reporting of groundwater withdrawals, restrictions on how much farmers can pump, and fines for pumping too much.

The key to enforcing the law: satellites. They’re already giving scientists a bird’s-eye view of underground water resources—and could be central to providing the state with detailed information about how much groundwater remains and how much water farms consume.

“We need more detailed information to actively manage the water basins,” said Lester Snow, executive director of the California Water Foundation in Sacramento and a former director of the California Department of Water Resources.

Since 2003, Famiglietti has been using data from GRACE, a joint U.S.-German satellite mission, to monitor groundwater depletion from space. The data show that water levels in the San Joaquin and Sacramento river basins were at their lowest levels in a decade by December 2013.

The Central Valley’s reserves are shrinking by 800 billion gallons a year. Famiglietti also sees close correlations between surface water allocations and groundwater reserves. When allocations are high, groundwater levels recover, but when they’re restricted—as they are now—“groundwater is hit very hard,” he said.
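For scale, the Central Valley figure can be converted into the units California water managers usually use. The factors below are the standard definitions of the US gallon and the acre-foot. The only input taken from the article is the 800 billion gallons a year.

```python
# Convert the Central Valley's annual groundwater loss into km^3 and acre-feet.
US_GALLON_M3 = 0.003785411784      # exact definition of the US gallon
ACRE_FOOT_M3 = 1233.48183754752    # one acre of land covered one foot deep

loss_gallons_per_year = 800e9      # figure quoted in the article
loss_m3 = loss_gallons_per_year * US_GALLON_M3

print(f"{loss_m3 / 1e9:.2f} km^3 per year")
print(f"{loss_m3 / ACRE_FOOT_M3 / 1e6:.2f} million acre-feet per year")
```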

GRACE can give California an overview of its groundwater resources, but it can’t tell officials how much groundwater each farm uses.

Groundwater used for irrigation ends up in one of three places: It evaporates from the ground, is soaked up by growing plants, or trickles into the soil to recharge the groundwater. Plants also release some of the water as vapor (called transpiration) during photosynthesis.

Although a water meter attached to a groundwater pump can track how much water a farmer withdraws from the ground, it doesn’t show how much is returned to the aquifer or lost to the air by evaporation or transpiration.

Satellites can also provide detailed information about this water loss—how much water agricultural producers withdraw permanently from the system. Richard Allen, a water resources engineer at the University of Idaho, uses images of vegetation and temperature from a Landsat satellite to calculate rates of evapotranspiration and estimate water use. He feeds the information into a computer model called METRIC (Mapping Evapotranspiration at High Resolution With Internalized Calibration), which allows him to see how much water is being consumed from one field to the next.
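Models in the METRIC family rest on the surface energy balance. The energy spent evaporating water (latent heat flux, LE) is what remains of net radiation (Rn) after soil heat flux (G) and sensible heat flux (H) are subtracted. The sketch below shows only that residual step and the conversion of daily latent energy into a depth of water. The flux values are hypothetical. METRIC's calibration of H against "hot" and "cold" anchor pixels, which the model's name refers to, is not reproduced here.

```python
# Minimal sketch of the energy-balance residual behind METRIC-style ET maps.
# Inputs are hypothetical daily totals in MJ per square metre.

LATENT_HEAT_MJ_PER_KG = 2.45  # approximate latent heat of vaporization of water


def latent_heat_flux(rn: float, g: float, h: float) -> float:
    """Energy left for evapotranspiration: LE = Rn - G - H (MJ/m^2/day)."""
    return rn - g - h


def et_depth_mm(le_mj_per_m2: float) -> float:
    """Convert latent energy to a depth of evaporated water.

    One kilogram of water spread over one square metre is a 1 mm layer, so
    MJ/m^2 divided by MJ/kg gives kg/m^2, which equals millimetres.
    Negative residuals (e.g. from flux errors) are clipped to zero.
    """
    return max(le_mj_per_m2, 0.0) / LATENT_HEAT_MJ_PER_KG


# Two hypothetical neighbouring pixels on the same day.
irrigated = et_depth_mm(latent_heat_flux(rn=18.0, g=1.0, h=2.3))
fallow = et_depth_mm(latent_heat_flux(rn=16.0, g=2.0, h=11.1))
print(f"irrigated field: {irrigated:.1f} mm/day")
print(f"fallow field:    {fallow:.1f} mm/day")
```

The irrigated pixel spends most of its energy evaporating water and stays cool (small H). The dry pixel heats the air instead, and that contrast is what lets a thermal image separate one field from the next.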

METRIC has been used in Idaho to quantify groundwater consumption from the Snake River Plain aquifer, which supports two million acres of irrigated land. Allen said METRIC helps monitor and manage water rights, identify illegal pumping, and provide better information about consumption.

“If farmers were asked to pay for groundwater, METRIC could give an accurate assessment of pumping over the course of a growing season,” said Allen. “We could send you an image that shows you how much water you used.”
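A season-long total, of the kind Allen describes, has to fill in the days between satellite overpasses. One approach used with METRIC expresses each image's ET as a fraction of reference ET (ETrF). That fraction is interpolated between image dates and multiplied by daily reference ET from a weather station. The sketch below assumes linear interpolation, and all inputs are hypothetical.

Setting the result against a pump meter reading then roughly separates water consumed from water returned to the aquifer. This is the gap a meter alone cannot close. The subtraction ignores rainfall and changes in soil moisture.

```python
import numpy as np

# Hypothetical overpass days (day of season) and the ETrF derived from each image.
image_days = np.array([0, 16, 32, 48, 64, 80])
etrf_at_images = np.array([0.3, 0.6, 0.95, 1.0, 0.85, 0.5])

# Hypothetical daily reference ET (mm/day) from a nearby weather station.
season_days = np.arange(0, 81)
etr_daily = np.full(season_days.shape, 7.0)

# Interpolate ETrF linearly between overpasses, then scale by daily ETr.
etrf_daily = np.interp(season_days, image_days, etrf_at_images)
et_daily = etrf_daily * etr_daily
seasonal_et_mm = et_daily.sum()

# Hypothetical metered withdrawal, expressed as a depth over the field (mm).
metered_withdrawal_mm = 600.0
# Crude split that ignores rainfall and soil-moisture change.
return_flow_mm = metered_withdrawal_mm - seasonal_et_mm

print(f"consumed (ET):                   {seasonal_et_mm:.0f} mm")
print(f"returned or otherwise unaccounted: {return_flow_mm:.0f} mm")
```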

Although there’s interest in California to include satellite-based measurements of groundwater and its consumption, bridging the gap between research and statewide operation takes time. But Famiglietti remains optimistic. “The results we have been getting are good, and they’re only going to get better,” he said.

California’s Water Crisis Is Getting Worse: Can Satellite Policing Help?

August 23rd, 2014

Via Take Part, an article on how the use of satellite technology could help California manage its remaining groundwater supplies:

GPS: Tracking West’s Vanishing Water

August 23rd, 2014

Via National Geographic, a report on the use of Global Positioning System (GPS) stations to track drought in the western USA:

Throughout the western United States, a network of Global Positioning System (GPS) stations has been monitoring tiny movements in the Earth’s crust, collecting data that can warn of developing earthquakes.

To their surprise, researchers have discovered that the GPS network has also been recording an entirely different phenomenon: the massive drying of the landscape caused by the drought that has intensified over much of the region since last year.

Geophysicist Adrian Borsa of the Scripps Institution of Oceanography and his colleagues report in this week’s Science that, based on the GPS measurements, the loss of water from lakes, streams, snowpack, and groundwater totals some 240 billion metric tons—equivalent, they say, to a four-inch-deep layer of water covering the entire western U.S. from the Rockies to the Pacific.
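The "four-inch layer" comparison can be checked by asking what area that mass of water would cover at that depth. The sketch assumes a fresh-water density of 1,000 kg per cubic metre. The 240 billion metric tons and the four inches come from the study as reported.

```python
# Back-of-envelope check: what area does 240 billion metric tons of water
# cover at a depth of four inches?
WATER_DENSITY_KG_PER_M3 = 1000.0   # assumed fresh-water density
METERS_PER_INCH = 0.0254

mass_kg = 240e9 * 1000.0            # 240 billion metric tons in kg
depth_m = 4 * METERS_PER_INCH       # four inches in metres
volume_m3 = mass_kg / WATER_DENSITY_KG_PER_M3
implied_area_km2 = volume_m3 / depth_m / 1e6

print(f"volume: {volume_m3 / 1e9:.0f} km^3")
print(f"implied area: {implied_area_km2 / 1e6:.2f} million km^2")
```

The result, a little over two million square kilometres, is on the order of the land area of the western states. That makes the authors' comparison a reasonable order-of-magnitude picture.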

The principle behind the new measurements is simple. The weight of surface water and groundwater deforms Earth’s elastic crust, much as a sleeper’s body deforms a mattress. Remove the water, and the crust rebounds.

As the amount of water varies cyclically with the seasons, the crust moves up and down imperceptibly, by fractions of an inch—but GPS can measure such small shifts.

Borsa knew all this when he started to study the GPS data. He wasn’t interested in the water cycle at first, and for him the seasonal fluctuations it produced in the data were just noise: They obscured the much longer-term geological changes he wanted to study, such as the rise of mountain ranges.

When he removed that noise from some recent station data, however, he noticed what he describes as a “tremendous uplift signal”—a distinct rise in the crust—since the beginning of 2013. He showed his findings to his Scripps colleague Duncan Agnew.

“I told him, ‘I think we’re looking at the effect of drought,’” Borsa remembers. “He didn’t believe me.”
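Borsa's first step, treating the seasonal cycle as noise and stripping it out, is commonly done by fitting a station's vertical position with an offset, a linear trend, and annual and semiannual sinusoids. The sketch below illustrates that general least-squares technique on synthetic data. It is not the study's actual processing chain. The amplitudes, the noise level, and the 2013 onset of extra uplift are all invented. The background model is fitted only to the pre-2013 record and then subtracted from the whole series, so any new uplift stands out in the residual.

```python
import numpy as np


def design_matrix(t: np.ndarray, t0: float = 2008.0) -> np.ndarray:
    """Columns: offset, linear trend, annual and semiannual sin/cos (t in years)."""
    tau = t - t0                     # centre time for better conditioning
    w = 2 * np.pi * tau
    return np.column_stack([np.ones_like(tau), tau, np.sin(w), np.cos(w),
                            np.sin(2 * w), np.cos(2 * w)])


def fit_background(t: np.ndarray, h: np.ndarray) -> np.ndarray:
    """Least-squares fit of trend plus seasonal terms to vertical position h (mm)."""
    coeffs, *_ = np.linalg.lstsq(design_matrix(t), h, rcond=None)
    return coeffs


# Synthetic daily series with hypothetical numbers: a seasonal cycle, a slow
# tectonic trend, and extra uplift that begins in 2013.
t = np.arange(2008.0, 2014.5, 1 / 365.25)
rng = np.random.default_rng(0)
seasonal = 3.0 * np.sin(2 * np.pi * t)
tectonic = 0.5 * (t - 2008.0)
drought_uplift = np.where(t > 2013.0, 4.0 * (t - 2013.0), 0.0)
h = seasonal + tectonic + drought_uplift + rng.normal(0.0, 0.5, t.size)

# Calibrate on the pre-2013 record, then remove that model from the full series.
calib = t < 2013.0
coeffs = fit_background(t[calib], h[calib])
residual = h - design_matrix(t) @ coeffs

print(f"mean residual before 2013: {residual[calib].mean():+.2f} mm")
print(f"mean residual, last 30 days: {residual[-30:].mean():+.2f} mm")
```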

The Dry Land Rebounds

But Borsa was right. As he, Agnew, and Daniel Cayan of Scripps report in Science, the recent uplift spike is consistent across the U.S. West, and consistent with recent declines in precipitation, streamflow, and groundwater levels. With a great weight of water removed, the crust is rebounding elastically across the whole region.

The median rise across all the western GPS stations has been four millimeters, just under a sixth of an inch. But the Sierra Nevada mountains, which have lost most of their snowpack, have risen 15 millimeters—nearly six-tenths of an inch.

The GPS data complements satellite observations from NASA’s ongoing Gravity Recovery and Climate Experiment, or GRACE. The GRACE satellites measure small changes in the Earth’s gravity field caused by the movement of water on and under the Earth’s surface, allowing researchers to estimate groundwater and soil moisture conditions around the world. GRACE can operate where GPS networks don’t exist—much of Africa and South America, for instance.

But where it’s available, as in the western U.S., GPS data can provide a more rapid and detailed picture of drought and its causes.

“We only see the big picture,” says Stephanie Castle, a water resources specialist at the University of California, Irvine, and the lead author of a recent study that used GRACE data to quantify groundwater loss in the Colorado River Basin. “The uplift data can point out more specifically where the depletion is happening.”

Where the Water Goes

This new precision has big political implications: With more than 99 percent of California still in a severe drought, and rights to its surface water heavily overallocated even in a good year, many of the state’s farmers are supplementing their water supplies by pumping more water from underground aquifers.

In the Central Valley so much groundwater has been extracted that the ground has subsided more than 30 feet in some places—swamping the much smaller regional uplift caused by the elastic rebound of the underlying crust.

California has some of the weakest groundwater regulations in the nation, and access to its well-drilling records is highly restricted. The GPS data isn’t detailed enough to point fingers at individual farmers, but its 125-mile resolution is good enough to identify especially profligate regions.

As climate change worsens water stress throughout the American West and beyond, such knowledge may well be vital. Borsa and his colleagues started out trying to filter the noise of the water cycle out of the GPS data; they ended up showing that the GPS network could help reveal what’s really going on with water.

“All of a sudden we’ve turned the whole thing around,” Borsa says. “It’s a huge change, and it makes the network useful to whole new branches of scientists and managers.”

Wired In The Wild

August 21st, 2014

Courtesy of Foreign Affairs, a detailed look at the impact that technology is having upon conservation:

Wired in the wild: an elephant with a tracking collar, Mozambique, November 2007.

Conservation is for the first time beginning to operate at the pace and on the scale necessary to keep up with, and even get ahead of, the planet’s most intractable environmental challenges. New technologies have given conservationists abilities that would have seemed like superpowers just a few years ago. We can now monitor entire ecosystems — think of the Amazon rainforest — in nearly real time, using remote sensors to map their three-dimensional structures; satellite communications to follow elusive creatures, such as the jaguar and the puma; and smartphones to report illegal logging.

Such innovations are revolutionizing conservation in two key ways: first, by revealing the state of the world in unprecedented detail and, second, by making available more data to more people in more places. Like most technologies, these carry serious, although manageable, risks: in the hands of poachers, location-tracking devices could prove devastating to the endangered animals they hunt. Yet on balance, technological innovation gives new hope for averting the planet’s environmental collapse and reversing its accelerating rates of habitat loss, animal extinction, and climate change.

CELL PHONES FOR ELEPHANTS

In 2009, I visited the Lewa Wildlife Conservancy, in northern Kenya. A cattle ranch turned rhinoceros and elephant preserve, Lewa has become a model for African conservation, demonstrating how the tourism that wildlife attracts can benefit neighboring communities, providing them with employment and business opportunities. When I arrived at camp, I was surprised — and a little dismayed — to discover that my iPhone displayed five full service bars. So much for a remote wilderness experience, I thought. But those bars make Lewa’s groundbreaking work possible.

More than a decade earlier, Iain Douglas-Hamilton, who founded the organization Save the Elephants, had pioneered the use of GPS and satellite communications to study the movements of elephants. At Lewa, Douglas-Hamilton outfitted elephants with tracking collars that connect to the Safaricom mobile network as easily as my cell phone did. These connections allow Lewa’s researchers to effectively call the tracking collars of the conservancy’s elephants and download their location data on demand, all the while plotting their migration between Lewa and the forests flanking Mount Kenya.

Today, Lewa uses the collars for more than research, piloting a program to reduce human-elephant conflict that results when elephants raid crops and to provide safer passage for elephants when they move through agricultural and other settled areas. Using accumulated data on elephant migration routes, the conservancy identified and protected ideal migration corridors. It even constructed a highway underpass to reduce the risk of elephants colliding with cars. Lewa also straps tracking collars on problem elephants with a history of raiding crops. If one of the elephants approaches a farm or village, its collar sends a text message to wildlife rangers, who can then quickly locate the animal and move it away in order to prevent any damage. True to their reputation for intelligence, the elephants quickly learn to mind such virtual fences and keep clear of farms.
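The "virtual fence" reduces to a distance check each time a collar reports a position. The sketch below assumes a circular alert zone around each farm and uses great-circle (haversine) distance. The farm names, coordinates, radii, and collar ID are hypothetical. The article does not say how Lewa's system actually defines its fences.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Farm:
    name: str
    lat: float              # decimal degrees
    lon: float              # decimal degrees
    alert_radius_km: float  # hypothetical circular fence around the farm


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def fence_alerts(collar_id: str, lat: float, lon: float, farms: list) -> list:
    """Return one alert message for every farm whose fence the position falls inside."""
    alerts = []
    for farm in farms:
        d = haversine_km(lat, lon, farm.lat, farm.lon)
        if d <= farm.alert_radius_km:
            alerts.append(f"{collar_id} is {d:.1f} km from {farm.name}")
    return alerts


farms = [Farm("Farm A", 0.20, 37.40, 2.0), Farm("Farm B", 0.30, 37.50, 2.0)]
for msg in fence_alerts("collar-07", 0.205, 37.41, farms):
    print(msg)  # a deployed system would send this to rangers as a text message
```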

The Lewa project shows how a relatively simple, low-cost tracking device can transform wildlife conservation. Using data from such devices, conservationists can shape protected areas around predictable migratory patterns — avoiding needless, often fatal confrontations between endangered species and human civilization. For example, Magellanic penguins that forage along the coast of Argentina have long been vulnerable to running into oil when they swim through shipping lanes. Once covered in oil, most penguins struggle to maintain their body temperature and die of hypothermia, and the survivors suffer from health and reproductive problems. In the mid-1990s, P. Dee Boersma, one of the world’s foremost authorities on penguin conservation, discovered that Argentina’s oil pollution was killing as many as 40,000 penguins each year. She used GPS tracking devices, at a time when the technology was on the cutting edge and costly, to document where the birds were foraging. She then worked with Argentinian authorities to move the shipping lanes farther offshore, dramatically reducing a mortality rate that could have easily led to the penguins’ extinction.

Tracking collars such as those used on Lewa’s elephants or the Magellanic penguins can cost as much as $5,000 each. But Eric Dinerstein, a leading scientist at World Wildlife Fund, has collaborated with engineers at a cell-phone company to make a GPS tracking device that can be manufactured for less than $300. The use of stronger and smaller components has also made it possible to tag and track a wider variety of species, from jaguars in dense jungle to albatross soaring over the open ocean.

COUNTING BY TREES

The tropical forests of the Amazon and the Congo are among the last of the planet’s vast wildernesses. They are menageries of innumerable species. And they act as the planet’s lungs, inhaling carbon dioxide — an overabundance of which causes climate change — and exhaling oxygen. For this reason, scientists know that forest conservation is an immediate and effective strategy for slowing climate change. Estimates suggest that the cutting and burning of tropical forests accounts for around ten percent of the carbon emissions that are heating the climate.

In 2005, the UN Framework Convention on Climate Change created REDD+, a program to compensate developing countries that reduce their overall carbon emissions through forest conservation. The system recognizes only huge-scale conservation achievements that can be measured and verified — often ten million acres or more. Hundreds of millions of donor dollars are now earmarked for countries that can estimate the avoided carbon emissions across vast areas with high levels of mathematical rigor. But such schemes have consistently run into one big technical stumbling block: it has been difficult to precisely measure, report, and verify the emissions-reduction benefits of variable stretches of forest. Early, labor-intensive methods of measuring a forest’s capacity to store carbon relied on satellite imagery to measure the overall area and ground surveys to size up individual trees in sample plots. The process was costly, and the accuracy of the estimates varied.

Scientists are now using remote sensors, laser-imaging technologies, and advanced statistical algorithms to see both the forest and the trees at the same time and in extraordinary detail — right down to the chemical signatures of individual trees. As their costs decline and their precision increases, these forest-scanning methods could unlock billions of dollars for innovative conservation programs that can prove their carbon, social, biodiversity, and even financial values.

Greg Asner, an ecologist at Stanford University and the Carnegie Institution for Science, is the leading pioneer of such forest-surveillance systems. His most recent project builds on a technology called “light detection and ranging” (LIDAR). Mounted on a small airplane, the system fires laser pulses through tree canopies down to the forest floor, and the reflected pulses return highly detailed data about the structure of the forest. Asner’s plane also carries a separate set of hyperspectral sensors that can detect a range of characteristics invisible to the naked eye, including a tree’s photosynthetic pigments, its basic structural compounds, and even the water content of its leaves. Researchers can use this data not only to estimate carbon storage capacities but also to analyze forest diversity and assess tree health. With a single airplane, these paired technologies can scan over 120,000 acres, or as many as 50 million trees, in a single day. And the equipment is so sophisticated that it can distinguish among 200 different tree species. Scientists are already integrating information gathered by the LIDAR system with national forest inventories in Nepal, Panama, Thailand, and dozens of other countries to establish their forests’ baselines of carbon storage. Diplomats at the United Nations and the World Bank recently agreed on the key rules for estimating the carbon storage capacity of individual forests, anticipating a coming wave of climate-mitigation finance.
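The structural half of the airborne approach can be sketched in three steps:

1. Subtract a bare-ground elevation model from the laser-derived canopy surface to get canopy height.
2. Average that height over plot-sized cells.
3. Convert height to carbon with a calibrated allometric relation.

Published LIDAR-carbon studies have used power-law fits of this general form. The coefficients below, however, are placeholders and not values from Asner's work. The hyperspectral measurements are not modelled, and the elevation grids are synthetic.

```python
import numpy as np

# Placeholder coefficients for carbon = A * height**B; real studies calibrate
# these against field plots.
A_COEFF = 0.8
B_COEFF = 1.5


def canopy_height(dsm: np.ndarray, dtm: np.ndarray) -> np.ndarray:
    """Canopy height model: laser-derived surface minus bare-ground elevation (m)."""
    return np.clip(dsm - dtm, 0.0, None)


def block_mean(grid: np.ndarray, k: int) -> np.ndarray:
    """Average a 2-D grid over non-overlapping k x k blocks (edges trimmed)."""
    rows, cols = grid.shape[0] // k, grid.shape[1] // k
    trimmed = grid[: rows * k, : cols * k]
    return trimmed.reshape(rows, k, cols, k).mean(axis=(1, 3))


def carbon_density(mean_tch_m: np.ndarray) -> np.ndarray:
    """Aboveground carbon density (Mg C/ha) from mean top-of-canopy height (m)."""
    return A_COEFF * mean_tch_m ** B_COEFF


# Synthetic 1 m grids covering 200 m x 200 m, i.e. four one-hectare cells.
rng = np.random.default_rng(1)
dtm = 100.0 + rng.normal(0.0, 0.2, (200, 200))   # bare-ground elevation (m)
dsm = dtm + rng.uniform(10.0, 30.0, (200, 200))  # canopy-surface elevation (m)

chm = canopy_height(dsm, dtm)
mean_tch = block_mean(chm, 100)                   # 100 m x 100 m = 1 ha cells
acd = carbon_density(mean_tch)
print(f"mean canopy height per ha (m):\n{mean_tch.round(1)}")
print(f"total aboveground carbon: {acd.sum():.0f} Mg C over {acd.size} ha")
```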

Researchers are also making use of high-resolution satellite imagery. In 2012, the biologist Michelle LaRue reported the findings of her satellite-based census of emperor penguins in Antarctica. Using high-resolution images collected by the QuickBird satellite, LaRue was able to count individual birds over huge areas of ice. Her study, the first of its kind, discovered seven previously unknown colonies and estimated the global population of emperor penguins at nearly 600,000 individuals — nearly 50 percent greater than previous estimates compiled from various ground-based observations of accessible penguin colonies. More accurate population assessments such as these will enable conservationists to verify whether conservation efforts are succeeding and target scarce resources toward the species in the greatest danger.

In a similar vein, the geologist John Amos, who founded the activist organization SkyTruth, now uses satellite imagery and digital mapping to document the environmental impact of mining, oil and gas development, and illegal fishing. He compiles series of satellite images of a single area to create animated visualizations of changes to the landscape over time. His findings have helped quantify the damage done by mountaintop removal mining and fracking. Amos’ analysis of satellite imagery of the Deepwater Horizon oil spill in 2010 accurately estimated that the spill was far larger than official reports initially claimed. And advanced imaging technologies are now enabling SkyTruth to detect and document illegal fishing activity in remote waters, which will aid enforcement efforts.

CAPITAL INVESTMENTS

Humanity’s survival depends on the planet’s stores of natural resources: its fish, water, wood, minerals, and arable land. But the replenishment of these goods depends on the world’s natural capital: its forests, grasslands, topsoil, lakes, rivers, and oceans. Increases in agricultural productivity and the expansion of critical infrastructure have improved the lives of billions of people but have left this natural capital dangerously depleted. As the overall demand for goods and services has continued to grow, human consumption of natural resources has become unsustainable. Since the mid-1970s, people have been consuming more resources than the world’s natural capital can replenish and producing more pollution than it can absorb. In 2010, the nonprofit organization the Global Footprint Network calculated that humanity now requires roughly 1.5 earths to sustain its current level of consumption each year. Put another way, humanity now uses up a year’s supply of the earth’s natural resources by mid-August. After that, it is drawing down against the future capacity of natural capital.

The Natural Capital Project, a joint venture of World Wildlife Fund, Stanford University, the Nature Conservancy, and the University of Minnesota, has generated the technology needed to manage natural capital and predict how changes in land management, infrastructure, and resource use will affect levels of water, timber, and fish, as well as natural defenses against floods and erosion. The project’s flagship technology is an open-source software package called InVEST, which uses relatively simple data inputs to produce maps, trend lines, and balance sheets that measure natural capital. These analyses can inform land-use, development, and conservation decisions by making the potential tradeoffs of various options clearer. InVEST calculates and visualizes how development choices will affect natural capital and, with it, the flows of goods and services to people from the environment.

Today, the Chinese government is using InVEST to establish a national network of “ecosystem function conservation areas” that will balance the potential environmental toll of development with targeted conservation projects. China plans to use the software to restrict development in designated areas that will cover about 25 percent of the country. InVEST is enabling Beijing to identify which areas can provide the biggest returns in natural capital — helping it avoid erosion, conserve water resources, prevent desertification, and protect biodiversity.

In 2011, World Wildlife Fund and the Indonesian government used the software to quantify the impact of different land-use and development scenarios on the forest ecosystems of Sumatra. Their studies compared the costs and benefits of alternative development plans by taking into account the carbon storage potential of local forests, the habitat for tigers, and the fresh water supply. Following this study, the Millennium Challenge Corporation recommended that InVEST be used to guide $332 million in environmental investments under a $600 million U.S.-Indonesian compact.
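InVEST's carbon-storage model works on a simple accounting principle. Each land-use or land-cover class is assigned a carbon density for four pools: aboveground biomass, belowground biomass, soil, and dead organic matter. Storage is then summed over the mapped area, so two development scenarios can be compared by difference. The Python below is not InVEST's own interface. It is a toy re-implementation of that accounting, loosely in the spirit of the Sumatra comparison, and the classes, densities, and areas are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical carbon densities (Mg C per hectare) for four pools:
# (aboveground, belowground, soil, dead organic matter).
CARBON_POOLS: Dict[str, Tuple[float, float, float, float]] = {
    "primary_forest": (200.0, 40.0, 60.0, 15.0),
    "oil_palm": (40.0, 10.0, 40.0, 5.0),
    "cropland": (5.0, 2.0, 30.0, 1.0),
}


def total_carbon(land_use_ha: Dict[str, float]) -> float:
    """Stored carbon (Mg C): sum over classes of area x summed pool densities."""
    return sum(area * sum(CARBON_POOLS[cls]) for cls, area in land_use_ha.items())


# Two hypothetical scenarios for the same 1,000-hectare landscape.
baseline = {"primary_forest": 800.0, "oil_palm": 150.0, "cropland": 50.0}
expansion = {"primary_forest": 500.0, "oil_palm": 450.0, "cropland": 50.0}

delta = total_carbon(expansion) - total_carbon(baseline)
print(f"baseline:  {total_carbon(baseline):,.0f} Mg C")
print(f"expansion: {total_carbon(expansion):,.0f} Mg C")
print(f"change:    {delta:+,.0f} Mg C")
```

The real tool applies the same arithmetic pixel by pixel over land-cover rasters. It also weighs carbon against other services, such as habitat and water supply, which this sketch leaves out.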

The private sector is using similar software, with many companies recognizing that their long-term profitability hinges on a sustainable supply of water, agricultural commodities, and other renewable resources that form the bases of their supply chains. For example, Coca-Cola and World Wildlife Fund recently renewed a strategic partnership that includes goals for incorporating natural-capital considerations into how Coca-Cola uses water and sources the commodities in its products. A number of considerations informed these goals, including data collected to better understand how Coca-Cola uses water throughout its supply chain and manufacturing processes. To date, Coca-Cola has improved its systemwide water efficiency by more than 21 percent, in addition to setting a goal to sustainably source key agricultural ingredients, such as cane sugar, corn syrup, and palm oil, by 2020. One of the company’s first major steps toward sustainable sourcing was its work in helping create Bonsucro, which sets global standards for sustainable sugar-cane production. In 2011, a sugar mill in São Paulo, Brazil, became the first to achieve Bonsucro certification, and Coca-Cola was the first buyer of the mill’s certified sugar.

FILLING HOLES

Biotechnology may have the most far-reaching and controversial implications for conservation. De-extinction — the notion that extinct species could be reconstituted from remnants of their DNA — has garnered significant media attention in recent years, as genetic sequencing and cloning technologies have made such a lofty goal appear ever more plausible. To paraphrase the author Stewart Brand, whose Revive & Restore initiative is working to bring back the passenger pigeon, extinction punches holes in the fabric of nature; de-extinction creates the opportunity to fill those holes, restoring biodiversity and making ecosystems more resilient.

Two of the most talked-about candidates for revival are the thylacine, a marsupial tiger that was hunted to extinction in Tasmania in the 1930s, and the North American passenger pigeon, which was once the world’s most abundant bird but disappeared due to excessive hunting and the clearing of its forest habitat. Scientists have already taken a first step with an odd Australian species, the gastric-brooding frog, which swallows its own eggs and regurgitates its offspring when they hatch: researchers have produced early-stage cloned embryos of the frog. Now, scientists could reconstitute many more species by replacing the DNA of closely related creatures with DNA from extinct species.

But reconstituting some extinct species is only the beginning. Synthetic biology is an emerging discipline that applies the tenets of engineering to modify, redesign, and even construct bacteria for specific purposes. Biologists are using advanced techniques in genetic sequencing and bioengineering to rewrite genetic code, manipulate the fundamental building blocks of biological functions, and assemble them within microbes that behave like tiny machines. Sophisticated equipment that was once available only to professional researchers in well-funded laboratories is now accessible to entrepreneurs in garage start-ups and to do-it-yourself hobbyists in community laboratories. This accessibility is rapidly accelerating the pace of innovation and expanding the scope of potential applications.

Synthetic biology could radically expand the possibilities for conservation. Consider, for example, the problem of desertification and land degradation. Every year, about 30 million acres of land succumb to desertification, rendering that land less productive for farming and threatening biodiversity. Around the world, as many as 5.4 billion acres of land — an area larger than South America — have been degraded by land clearing, soil erosion, or unsustainable farming practices. Rehabilitating that land could help farmers meet rising food demands and could restore natural habitats. But it takes far longer to naturally revive soils and regrow natural vegetation than it takes to destroy them — and the planet cannot afford to wait.

Synthetic biologists are already seeking a faster solution. As part of the 2011 International Genetically Engineered Machine Competition, Christopher Schoene, a doctoral candidate in biochemistry at Imperial College London, came up with a way to use synthetic biology to accelerate the rehabilitation of damaged lands. Working with a small group of fellow students, Schoene designed a project to engineer microbes that could find plant roots and stimulate their growth to improve their absorption of water and essential nutrients. If such advances progress past the proof-of-concept stage, they could slow or counteract land degradation, since roots hold soil in place.

Of course, many important questions about the risks of such technologies have yet to be answered. Engineered microbes could have many negative impacts on natural organisms outside the laboratory. They could evolve such that their intended functions diminish or adapt in ways that pose unintended consequences. Nevertheless, the fundamental technologies are coming into place. Synthetic biology, as it advances, could be used to supercharge nature, helping it bounce back faster and stay stronger in the face of human pressures.

RISKY BUSINESS

Excitement about the possibilities of technology must be tempered by a recognition of their risks. For example, an explosion of wildlife crime in Africa is resulting in the slaughter of hundreds of rhinoceros for their horns and thousands of elephants for their tusks. Technology is helping conservationists defend against the poachers through improved monitoring and surveillance. But the information that conservationists are using for good could present a significant risk if it fell into criminal hands. Data security thus becomes as important as physical security in the bush.

Biotechnology applications could have any number of unintended consequences. Efforts to revive extinct species are controversial because they could create a moral hazard, making extinction risks seem less urgent. Some critics of biotechnology warn of a Jurassic Park scenario, in which a genetically engineered organism escapes from the lab and wreaks ecological havoc in the natural world. Such a fear is not unjustified given the economic and ecological damage invasive species have caused when introduced in places where they have few competitors or predators. Costly examples include the zebra mussel, which clogs water intakes in the Great Lakes, and the chestnut blight that nearly wiped out the American chestnut tree in the early twentieth century. Synthetically engineered genes could also spread from modified plants or bacteria to other species, resulting in unexpected consequences: such a gene transfer is one of the primary mechanisms behind the rapid spread of antibiotic resistance among bacteria. Current evidence suggests that so-called horizontal gene transfers among genetically modified organisms are infrequent. Nonetheless, bioengineers will need to adopt safety measures to manage and diminish such risks. And scientists need to deepen their understanding of the ecology of engineered organisms and how they interact with other species in the environment.

Some of the most promising applications of technology to conservation may also be limited by a lack of basic infrastructure. Although I enjoyed excellent cell-phone connectivity in northern Kenya, many of the world’s most important natural areas remain wild and off the grid. That means technologies that rely on connections to telecommunications and power networks won’t work there — at least until those networks expand. The proliferation of more affordable satellite uplinks, microcell towers, and solar power has the potential to help overcome such limitations.

At the end of the day, however, technology is merely a tool — one that can help but also do harm. To maximize its potential benefits, conservationists and technologists will need to come together to determine how technology should and should not be used. But as rising populations and surging consumer demand stress the planet’s ability to sustain vital resources, humanity needs all the help it can get. Technology may not be a panacea for the world’s many environmental ills, but it could still help tip the balance toward a sustainable future.

Earth Observation Satellites: Helping Scientists Better Understand Global Change

August 21st, 2014

Courtesy of Yale’s Environment360, a look at several initiatives that use Earth observation satellites to measure and monitor global climate change:

1. NASA’s Orbiting Carbon Observatory-2 (OCO-2) is one of five Earth-observing missions launched in 2014, the most in a single year in more than a decade. OCO-2, which joins a constellation of five observation satellites already orbiting the Earth, will monitor the global carbon cycle by measuring how atmospheric carbon dioxide absorbs individual bands, or wavelengths, of sunlight. The satellite will collect roughly one million measurements per day, although only about a tenth of those are expected to be cloud-free enough to provide usable data. The two-year mission will allow scientists to track sources of CO2 emissions and the “sinks” where CO2 is drawn out of the atmosphere.
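Those figures are easy to sanity-check. This minimal sketch simply multiplies the round numbers quoted above (about one million measurements a day, about a tenth usable, a two-year mission); it ignores leap days, downtime, and calibration periods, so the totals are rough estimates rather than mission statistics.

```python
# Rough OCO-2 sounding budget from the round figures quoted in the text.
MEASUREMENTS_PER_DAY = 1_000_000   # "roughly one million measurements per day"
CLOUD_FREE_FRACTION = 0.1          # "about a tenth" expected to be usable
MISSION_DAYS = 2 * 365             # two-year mission; ignores leap days and downtime


def usable_soundings(days: int) -> int:
    """Estimate the number of cloud-free, usable soundings over `days` days."""
    return round(MEASUREMENTS_PER_DAY * CLOUD_FREE_FRACTION * days)


if __name__ == "__main__":
    print(f"Usable per day:      {usable_soundings(1):,}")
    print(f"Usable over mission: {usable_soundings(MISSION_DAYS):,}")
```

Even after discarding nine in ten measurements, the mission would still yield on the order of tens of millions of usable soundings.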

2. Launched in 2010 as part of the European Space Agency’s Earth Explorer program, CryoSat-2 is the first mission specifically designed to measure changes in polar sea ice thickness, one indicator of warming seas. The satellite orbits about 700 kilometers above the Earth and reaches latitudes as high as 88° North and South. It uses a Synthetic Aperture Interferometric Radar Altimeter (SIRAL) to measure changes in land ice elevation and in sea ice thickness relative to the ocean surface, giving researchers a better understanding of changes in the volume of polar sea ice. CryoSat-2’s altimeter also measures sea level with unprecedented accuracy, capturing localized ocean phenomena such as eddies and storm surges and even revealing features of the ocean floor. This image shows Arctic sea ice as measured by CryoSat-2 in April 2013.
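An altimeter does not see thickness directly; it measures freeboard, the height of the ice above the surrounding water. A common way to convert freeboard to thickness, sketched below, assumes the floe floats in hydrostatic equilibrium, so the weight of ice plus snow equals the weight of displaced seawater. The density values and snow depths here are typical textbook assumptions, not CryoSat-2 processing parameters.

```python
# Hydrostatic conversion from sea-ice freeboard to ice thickness.
# Assumed typical densities in kg/m^3; operational products use their own values.
RHO_WATER = 1024.0
RHO_ICE = 917.0
RHO_SNOW = 300.0


def ice_thickness(ice_freeboard_m: float, snow_depth_m: float = 0.0) -> float:
    """Return ice thickness (m) from ice freeboard and snow depth (m).

    Balances ice + snow weight against displaced water:
        RHO_ICE*h_i + RHO_SNOW*h_s = RHO_WATER*(h_i - f_i)
    which rearranges to
        h_i = (RHO_WATER*f_i + RHO_SNOW*h_s) / (RHO_WATER - RHO_ICE)
    """
    return (RHO_WATER * ice_freeboard_m + RHO_SNOW * snow_depth_m) / (RHO_WATER - RHO_ICE)


if __name__ == "__main__":
    for freeboard, snow in ((0.1, 0.0), (0.2, 0.2)):
        print(f"freeboard {freeboard} m, snow {snow} m -> "
              f"{ice_thickness(freeboard, snow):.2f} m of ice")
```

Because the denominator is small, a 10-centimeter freeboard already implies nearly a meter of ice, which is why centimeter-level altimeter precision matters for thickness estimates.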

3. Salt, which makes up about 3.5 percent of seawater by weight (roughly 35 grams per kilogram), plays a major role in both regulating ocean currents and moderating Earth’s climate. The Aquarius mission, a partnership between NASA and Argentina’s space program (the Comisión Nacional de Actividades Espaciales), helps scientists better understand how surface salinity levels affect heat and water exchange between the ocean and the atmosphere. Because salinity levels are affected by precipitation, evaporation, freshwater inputs, and melting ice, scientists can use Aquarius to trace changes in the global water cycle. This image depicts sea surface salinity as measured in June 2014. Reds show higher salinity (40 grams per kilogram), and purples show relatively low salinity (30 grams per kilogram).

4. Originally created in 1978 by the United States and the French space agency to monitor meteorological and oceanographic conditions, the Argos system is now used for a variety of observations, including tracking terrestrial, avian, and aquatic wildlife migrations. Biologists attach small tags, called platforms, to wildlife, and the platforms regularly transmit signals to orbiting satellites, enabling scientists to determine the animals’ locations. With 21,000 animals tagged worldwide, scientists use Argos to monitor how migratory species such as manta rays, Alaska’s porcupine caribou herd, and albatrosses adapt to global change. This image is an example of an Argos map showing platform locations.
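Argos locates a platform from the Doppler shift of its transmissions as a satellite passes overhead: the received frequency is higher while the satellite approaches and lower once it recedes, and the timing and shape of that curve constrain where the platform sits relative to the satellite’s ground track. The sketch below computes the non-relativistic shift for a few illustrative range rates; the 401.650 MHz carrier is assumed here as the nominal Argos uplink frequency, and the velocities are made-up values, not real pass data.

```python
# Non-relativistic Doppler shift seen by a satellite receiving a ground transmitter.
SPEED_OF_LIGHT = 299_792_458.0   # m/s
CARRIER_HZ = 401.650e6           # assumed nominal Argos uplink frequency


def doppler_shift_hz(range_rate_m_s: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Received-minus-transmitted frequency in Hz.

    range_rate_m_s > 0 means the satellite-to-platform distance is growing
    (satellite receding), which lowers the received frequency.
    """
    return -carrier_hz * range_rate_m_s / SPEED_OF_LIGHT


if __name__ == "__main__":
    # Illustrative range rates over one pass: approaching (negative) to receding (positive).
    for rate in (-6000.0, -3000.0, -500.0, 500.0, 3000.0, 6000.0):
        print(f"range rate {rate:+7.0f} m/s -> shift {doppler_shift_hz(rate):+9.1f} Hz")
```

The shift changes sign at the point of closest approach, which is what makes the curve useful for estimating position.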

5. Farmers are facing increasing uncertainty due to climate change. Programs such as the European Space Agency’s Sentinel-2, part of the Copernicus Land Monitoring Service, monitor soil moisture conditions and forecast crop yields so that farmers can apply water and chemicals more accurately and efficiently. In addition to monitoring site-specific crop and plant health, Copernicus satellites track variables such as global vegetation cover, water cycling, and heat emitted from the Earth’s surface. These technologies support what is called “precision farming” and help farmers maintain high crop yields amid changing climatic conditions. This image, captured near Garden City, Kansas, reveals circular crop plots in infrared.
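Crop-health products like these are often built on vegetation indices. A widely used one is the Normalized Difference Vegetation Index (NDVI), which contrasts near-infrared reflectance (strongly reflected by healthy leaves) with red reflectance (absorbed by chlorophyll). The sketch below assumes surface reflectances in the 0 to 1 range from a red band and a near-infrared band (B4 and B8 on Sentinel-2); the tiny 2x2 scene is made up for illustration.

```python
from typing import List


def ndvi(red: float, nir: float) -> float:
    """NDVI = (NIR - Red) / (NIR + Red), ranging from -1 to 1."""
    total = nir + red
    if total == 0:
        return 0.0  # avoid division by zero on empty or no-data pixels
    return (nir - red) / total


def ndvi_image(red_band: List[List[float]], nir_band: List[List[float]]) -> List[List[float]]:
    """Apply NDVI pixel by pixel to two equally sized 2-D reflectance grids."""
    return [
        [ndvi(r, n) for r, n in zip(red_row, nir_row)]
        for red_row, nir_row in zip(red_band, nir_band)
    ]


if __name__ == "__main__":
    # Hypothetical scene: left column irrigated crop, right column bare soil.
    red = [[0.05, 0.20], [0.06, 0.22]]
    nir = [[0.45, 0.25], [0.50, 0.26]]
    for row in ndvi_image(red, nir):
        print([f"{v:.2f}" for v in row])
```

Dense, healthy vegetation pushes NDVI toward 1, while bare soil sits near 0, so irrigated plots separate cleanly from their surroundings.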


Google Powers Online Resource Tracking Global Deforestation

August 21st, 2014

Via Forbes, a report on a new tool from the World Resources Institute, which has used technologies from Google Maps and Google Earth to create an interactive map that shows forest coverage almost in real time, marking where coverage has increased and, more often, where it has decreased:

Last fall, I wrote about efforts by Google and Microsoft to help put some analytics muscle behind tracking various natural resources.

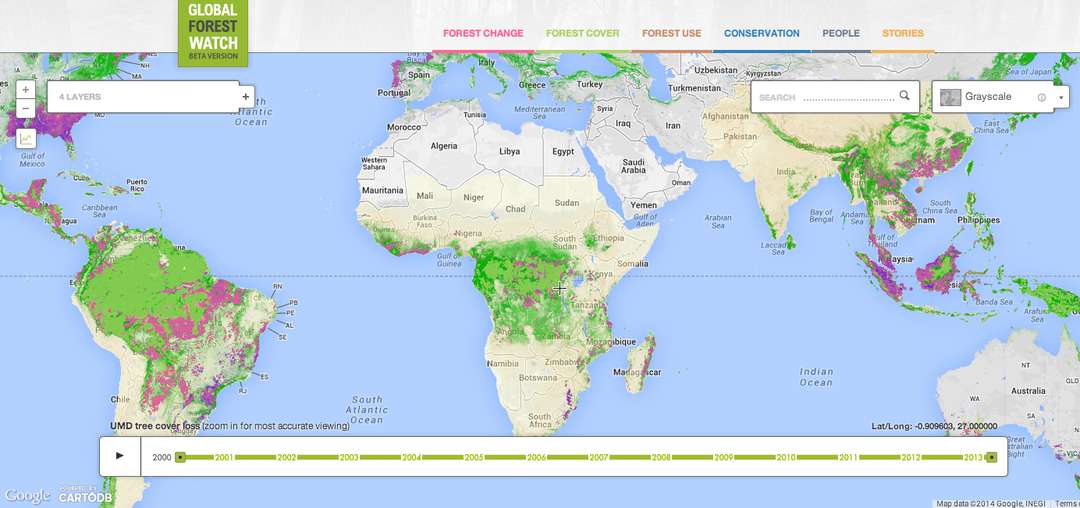

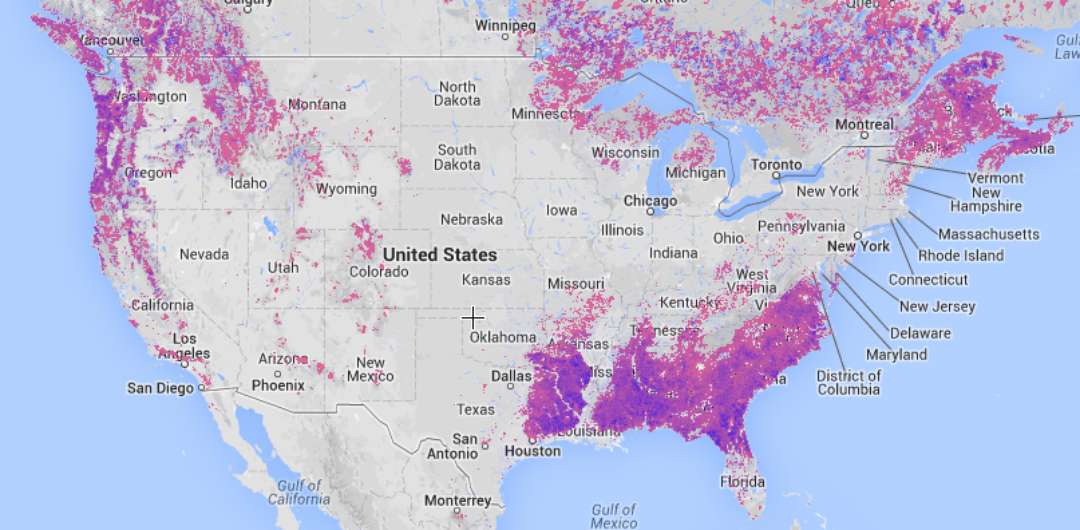

Now, Google has teamed up with the World Resources Institute (WRI) and more than 40 other organizations to create a massive, near real-time forest monitoring system, called Global Forest Watch.

The master plan is to provide a crowdsourced resource that businesses, communities and local governments can use to keep abreast of deforestation, mining and other activities that could threaten tree cover and biodiversity. It relies on Google’s cloud services and mapping resources from Esri, satellite technology, open data tools and feeds from numerous scientific sources, including daily forest fire alerts from NASA. When forest losses are detected, anyone using the resource is alerted. The monthly tree-loss data, for example, has a resolution of 50 meters; the annual data has a resolution of 30 meters.
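Resolution translates directly into the smallest area a single pixel represents: at 30 meters each pixel covers 900 square meters (0.09 hectares), and at 50 meters each covers 2,500 square meters (0.25 hectares). The sketch below converts a count of pixels flagged as tree-cover loss into hectares. The pixel count is hypothetical, and it treats pixels as perfect squares; datasets stored on a latitude/longitude grid would also need to correct for pixel area shrinking toward the poles.

```python
SQ_M_PER_HECTARE = 10_000


def loss_area_hectares(flagged_pixels: int, resolution_m: float) -> float:
    """Area in hectares covered by `flagged_pixels` square pixels of side `resolution_m`."""
    return flagged_pixels * resolution_m ** 2 / SQ_M_PER_HECTARE


if __name__ == "__main__":
    # Hypothetical alert: 1,200 pixels flagged as tree-cover loss.
    print(loss_area_hectares(1_200, 30))  # 108.0 (annual 30 m data)
    print(loss_area_hectares(1_200, 50))  # 300.0 (monthly 50 m data)
```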

Screenshots of the Global Forest Watch Web App.

“Businesses, governments and communities desperately want better information about forests. Now they have it. … From now on, the bad guys cannot hide and the good guys will be recognized for their stewardship,” said Andrew Steer, president and CEO of WRI.

Between 2000 and 2012, the world lost 2.3 million square kilometers (or 230 million hectares) of tree cover, the equivalent of cutting down 50 soccer fields’ worth of trees every minute. The most threatened regions during that timeframe were Brazil, Canada, Indonesia, Russia and the United States. (I am listing them alphabetically, not necessarily in their order of contribution to the decline.)
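The soccer-field comparison can be checked with a few lines of arithmetic. This sketch assumes a 12-year window and a standard pitch of 105 by 68 meters (about 0.71 hectares); under those assumptions the loss works out to roughly 50 fields per minute, or on the order of 73,000 fields per day.

```python
# Check the "soccer fields" comparison for 2000-2012 tree-cover loss.
TOTAL_LOSS_KM2 = 2.3e6           # 2.3 million square kilometers
HECTARES_PER_KM2 = 100
YEARS = 12                       # assumed length of the 2000-2012 window
PITCH_HA = 105 * 68 / 10_000     # assumed standard pitch, 105 m x 68 m = 0.714 ha

total_ha = TOTAL_LOSS_KM2 * HECTARES_PER_KM2
days = YEARS * 365.25
fields_per_day = total_ha / PITCH_HA / days
fields_per_minute = fields_per_day / (24 * 60)

print(f"Total loss:        {total_ha:,.0f} ha")
print(f"Fields per day:    {fields_per_day:,.0f}")
print(f"Fields per minute: {fields_per_minute:,.1f}")
```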

How would a business use this resource?

Paper companies, for example, could keep watch over timber suppliers to ensure that their concessions aren’t encroaching on rainforests. Asia Pulp & Paper intrigued many almost exactly one year ago when it embraced a far-reaching conservation strategy. Organizations that rely on palm oil, soy or beef for their products might likewise monitor how their supply chain partners are complying with local regulations for sourcing or cultivating these commodities or with sustainable resource commitments. Two big players already using the tool are Nestlé and Unilever.

“Deforestation poses a material risk to businesses that rely on forest-linked crops. Exposure to the risk has the potential to undermine the future of businesses,” said Unilever CEO Paul Polman. That’s one reason the company has pledged to source all of its agricultural raw materials “sustainably.”

Here’s the thing: if your team needs to start tracking this sort of information, why not use an open resource like this one? WRI is also behind a similar resource for mapping water risks, called Aqueduct.


Open Water: Exploring Open Source Water Quality Monitoring

August 21st, 2014

Via MIT’s Center for Civic Media, details on an interesting project exploring open-source water quality monitoring:

Over the last several months, Civic has been working on the Open Water Project, which aims to develop and curate a set of low-cost, open-source tools enabling communities to collect, interpret, and share their water quality data. Open Water is an initiative of Public Lab, a community that uses inexpensive DIY techniques to change how people see the world in environmental, social, and political terms (read more about Public Lab and the Open Water initiative here). The motivation behind Open Water derives partly from the fact that most water quality monitoring uses expensive, proprietary technology, limiting the accessibility of water quality data. Inexpensive, open-source approaches to water quality monitoring could enable groups ranging from watershed managers to homeowners to more easily collect and share water quality data.

As part of the Open Water Project, we’ve looked at other open-source water quality monitoring tools and initiatives (you can read more about those initiatives on this Public Lab research note, “What’s Going on In Water Monitoring”) and we’ve had meetups to talk about water quality and monitoring strategies (here’s a summary of an awesome water quality primer with Jeff Walker). We’ve also been working on development of the Riffle — the “Remote, Independent, and Friendly Field Logger Electronics”. The Riffle is a low-cost, open-source hardware device that will measure some of the most common water quality parameters using a design that makes it possible for anyone to build, modify, and deploy water quality sensors in their own neighborhood. Specifically, the Riffle will measure conductivity, temperature, and depth, which can serve as indicators for potential pollutants. Eventually the Riffle will be able to fit in a plastic water bottle.
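Measuring temperature alongside conductivity matters because a water sample’s conductivity rises with temperature, by roughly 2 percent per degree Celsius for many natural waters, so readings are usually normalized to 25 °C before being compared. The sketch below uses that common linear correction; the 0.02 coefficient is a typical assumed value, not a Riffle calibration constant, and the readings are hypothetical.

```python
def conductivity_at_25c(measured_us_cm: float, temp_c: float, alpha: float = 0.02) -> float:
    """Normalize a conductivity reading (uS/cm) to 25 degC with a linear model.

    EC25 = EC_T / (1 + alpha * (T - 25)), where alpha (per degC) is an assumed
    typical value for natural waters.
    """
    return measured_us_cm / (1 + alpha * (temp_c - 25.0))


if __name__ == "__main__":
    # Hypothetical readings taken at different water temperatures.
    print(round(conductivity_at_25c(400.0, 10.0), 1))  # cold stream reads low
    print(round(conductivity_at_25c(600.0, 35.0), 1))  # warm sample reads high
```

Without this correction, the same water would appear to change quality as it warms and cools through the day.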

A few weeks ago, Public Lab received generous support from Rackspace for an Open Water event in July, and Catherine D’Ignazio, Don Blair, and I decided we’d host a workshop focused on exploring conductivity as an important and widely used water quality parameter. In addition to facilitating a group discussion on the topic, we hoped we could work together on prototyping simple, inexpensive, and creative ways of measuring conductivity.

We started the workshop with a discussion around water quality monitoring and community support structures, including what a distributed water quality monitoring effort might look like (and some of the associated challenges) and strategies for developing community support (e.g. ‘tool libraries’ that include water quality monitoring tools). We also talked about the ways in which inexpensive, non-proprietary sensors might allow for new and important questions to be asked and answered in water quality, and how we might calibrate open hardware sensors.

Next, Craig Versek gave a mini lecture on conductivity measurements, and we dove into building a resistance-dependent oscillator circuit using the 555 timer (a fairly simple circuit). The idea behind the circuit is that the output’s oscillation frequency increases as the resistance decreases, so an LED blinks, or a piezo buzzer clicks, at a different rate. In our case, the resistance comes from the water sample connected to the circuit; thus, the LED blinking (or piezo buzzing) tracks the water’s conductivity, which is inversely related to its resistance.
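For a 555 wired as a standard astable oscillator, the output frequency is approximately f = 1.44 / ((R1 + 2·R2)·C). The sketch below assumes the water probe takes the place of R2 and uses hypothetical values for R1 and C, since the workshop circuit’s component values aren’t given. It reproduces the behavior described above: lower probe resistance (saltier water) gives a higher blink or click rate.

```python
def astable_frequency_hz(r1_ohm: float, r2_ohm: float, c_farad: float) -> float:
    """Approximate 555 astable output frequency: f = 1.44 / ((R1 + 2*R2) * C)."""
    return 1.44 / ((r1_ohm + 2 * r2_ohm) * c_farad)


R1_OHM = 1_000.0   # hypothetical fixed resistor
C_FARAD = 1e-6     # hypothetical 1 uF timing capacitor

if __name__ == "__main__":
    # Hypothetical probe resistances, from fairly fresh to salty water.
    for probe_ohm in (100_000.0, 10_000.0, 1_000.0):
        f = astable_frequency_hz(R1_OHM, probe_ohm, C_FARAD)
        print(f"probe {probe_ohm:>9,.0f} ohm -> {f:8.1f} Hz")
```

Putting the probe in the R2 position is a design assumption; because R2 appears twice in the denominator, it makes the frequency more sensitive to the water than using it as R1 would. With these values, fresh water gives a visible blink of a few hertz while salty water pushes the output into the audible range.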

Participants breadboard the conductivity circuit.

Before the workshop, Craig Versek had measured out various amounts of table salt so that we could prepare water samples whose salinity matched some real-world examples (here’s a table of salinity values for common water sources). After building the circuits, we explored how solutions of varying salinity affected the rate of oscillation by watching the LED blink rate change as we dipped the probe into the various samples. We then replaced the LED with a piezo buzzer and listened to the results. Some folks in the workshop even connected the circuit via an audio jack to a computer and then used an open-source, browser-based pitch detector to associate specific pitches with water samples.
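Preparing samples like these is a small mass-balance calculation. If salinity is expressed as grams of salt per kilogram of solution, then S = 1000 · salt / (salt + water), which can be solved for the amount of salt to add. The sketch below treats table salt as pure NaCl and the target salinities as illustrative values; neither comes from the workshop’s own recipe.

```python
def salt_grams(water_grams: float, target_g_per_kg: float) -> float:
    """Grams of salt to dissolve in `water_grams` of fresh water so the
    resulting solution contains `target_g_per_kg` grams of salt per kilogram.

    From S = 1000 * salt / (salt + water), solve for salt.
    """
    if not 0 <= target_g_per_kg < 1000:
        raise ValueError("salinity must be in [0, 1000) g/kg")
    return water_grams * target_g_per_kg / (1000.0 - target_g_per_kg)


if __name__ == "__main__":
    # Illustrative targets for about 1 liter (~1,000 g) of fresh water.
    for label, s in (("brackish", 5.0), ("typical seawater", 35.0)):
        print(f"{label:>17}: {salt_grams(1000.0, s):.1f} g salt")
```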

By the end of the workshop, we had:

- built simple, cheap 555 conductivity meters on a breadboard

- demonstrated that we could distinguish solutions of varying salinity from one another using this circuit

- added an audio component to the circuit via a piezo buzzer, allowing one to ‘hear’ the conductivity of solutions

- wired up an audio jack to the circuit, so that the resultant audio could be recorded on a smartphone or laptop

- tested out browser-based pitch detection software — different levels of conductivity can now be assessed using only the browser!
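The browser-based pitch detector isn’t described in detail, so the sketch below swaps in one of the simplest pitch estimators, counting rising zero crossings, and pairs it with a hypothetical calibration table that maps a measured frequency back to the closest known sample. It runs on a synthetic tone rather than a real recording; a real piezo signal is noisier and would likely need filtering first.

```python
import math
from typing import Dict, List


def estimate_frequency(samples: List[float], sample_rate: int) -> float:
    """Estimate the dominant frequency (Hz) by counting rising zero crossings."""
    rising = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    duration_s = len(samples) / sample_rate
    return rising / duration_s


def nearest_sample(freq_hz: float, calibration: Dict[str, float]) -> str:
    """Return the calibration label whose frequency is closest to `freq_hz`."""
    return min(calibration, key=lambda label: abs(calibration[label] - freq_hz))


if __name__ == "__main__":
    rate = 44_100
    # Synthetic one-second tone standing in for a recorded buzzer signal.
    tone = [math.sin(2 * math.pi * 440.0 * n / rate) for n in range(rate)]
    freq = estimate_frequency(tone, rate)
    # Hypothetical calibration: frequencies recorded for known salt solutions.
    calibration = {"tap water": 70.0, "brackish": 250.0, "seawater": 450.0}
    print(f"{freq:.0f} Hz -> {nearest_sample(freq, calibration)}")
```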

There’s much more to explore in conductivity measurements and in water quality monitoring in general, and we’ll be hosting more Open Water workshops to explore open-source water quality monitoring techniques. To learn more about the project, visit Public Lab’s Open Water project page.

